
Optimizing Data Warehousing Solutions with Azure: In22labs' Billion-Data-Point Challenge

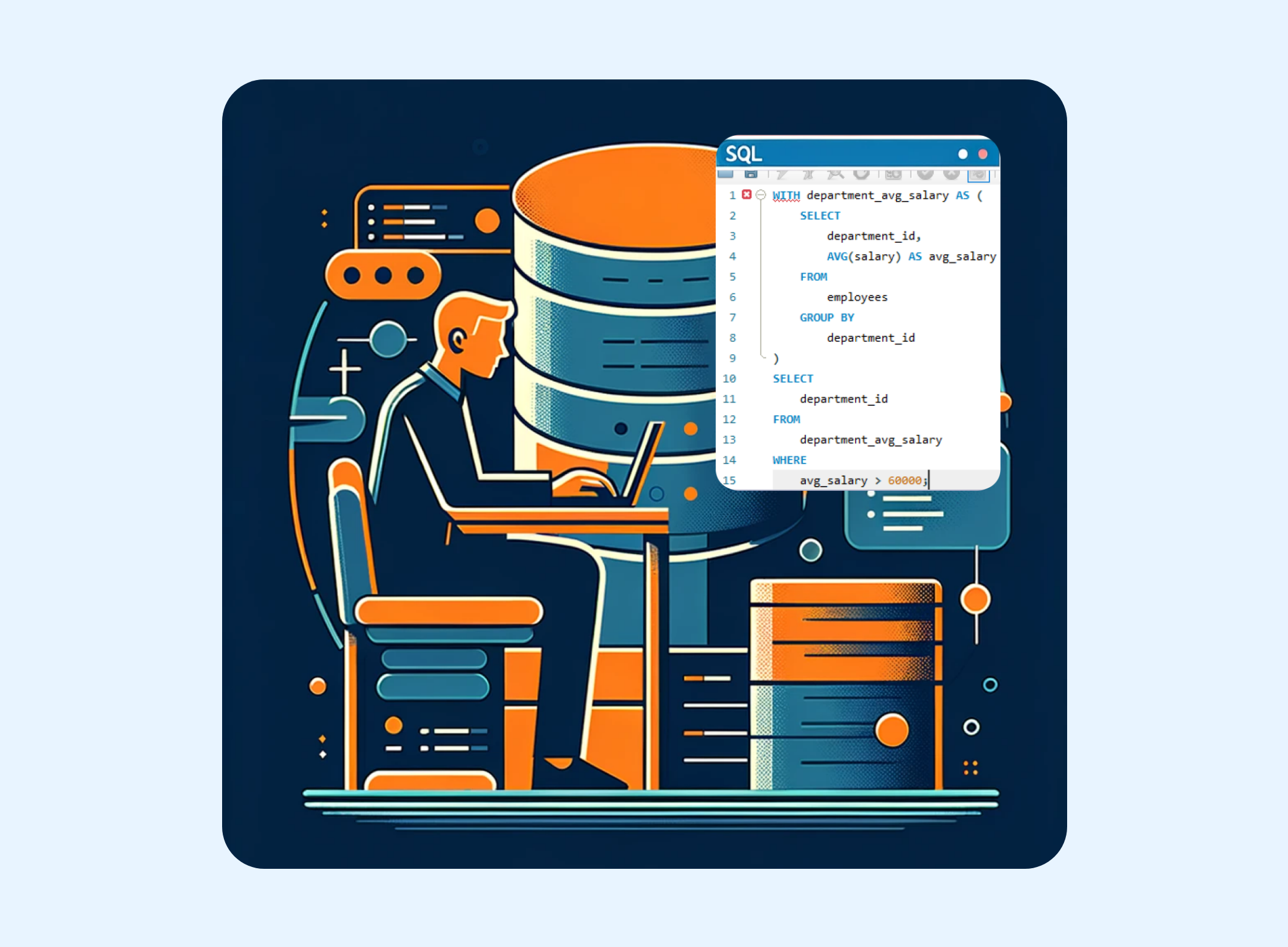

Managing enormous volumes of heterogeneous data is one of the hardest problems in data warehousing. At In22labs, we recently worked on a project that required managing more than a billion data points with widely varying structures. The project relied on 1 lakh (100,000) external data enumerators conducting surveys. Together they completed 12 million surveys, which produced a database of more than 200 gigabytes. This underscores our proficiency in handling extensive datasets with precision and efficiency. This post explains how we met that challenge and focuses on the technical details of how we used Azure Data Lake Storage (ADLS) Gen2 and Azure data pipelines.

The Data Challenge

The main obstacle was the sheer volume and diversity of the data. We had to deal with structured and unstructured data arriving from different systems, which created serious data silos. The goal was not just to store this data but to make it accessible and usable for analysis.

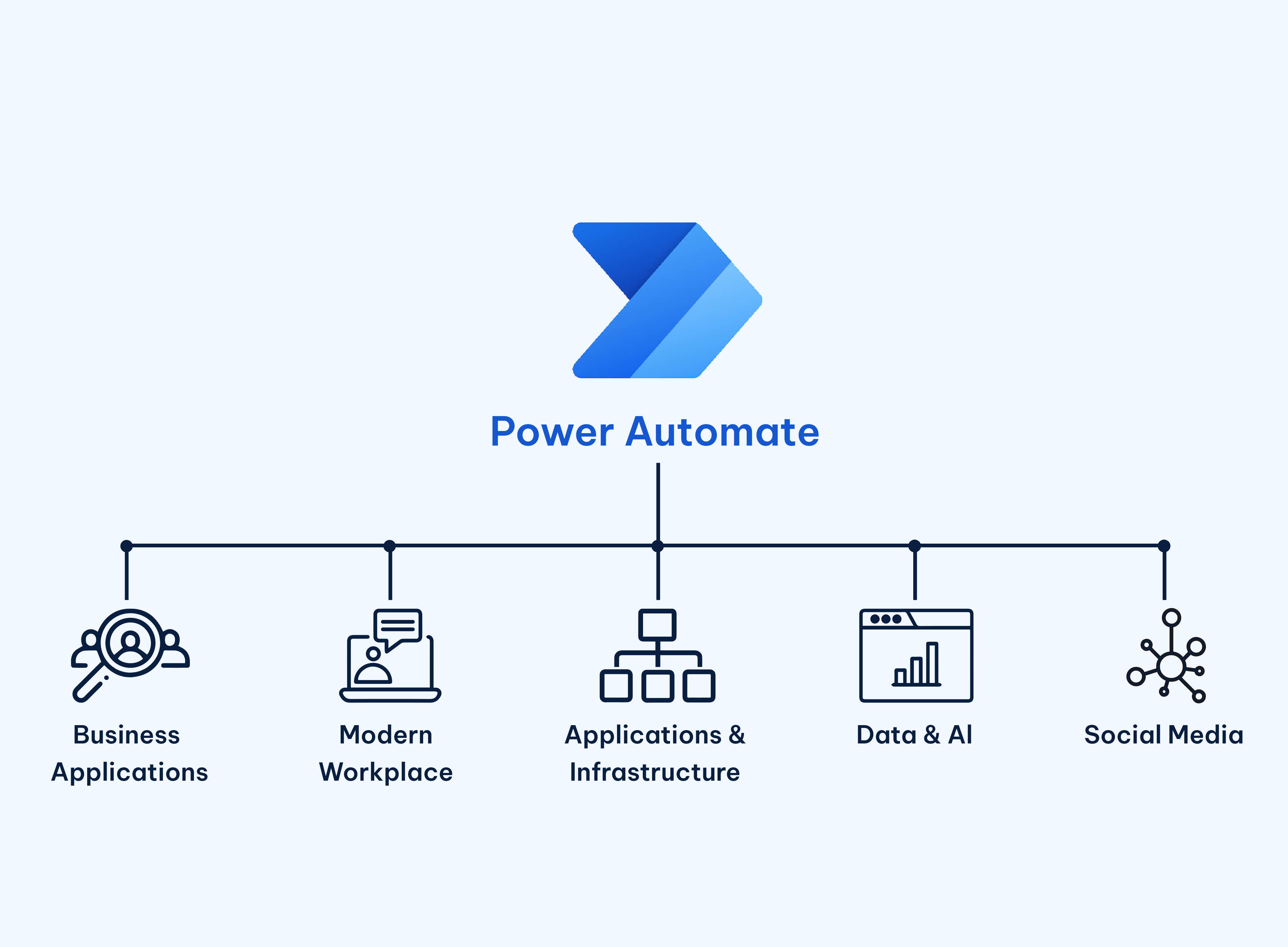

Why Azure?

We chose Azure's ADLS Gen2 for its scalability and its advanced analytics features. The following capabilities influenced our choice:

- Hierarchical namespace for effective data organization.

- Integration with Azure Data Factory for orchestration and Azure Synapse Analytics for analytics.

- High-throughput data streaming for real-time analytics.

Implementing Azure Solutions

The implementation phase involved multiple key steps:

Data Migration: We used Azure Data Factory for bulk data movement, relying on its Copy Data tool for the initial large-scale migrations.
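Splitting a large source into key ranges that can be copied in parallel is one common way to speed up an initial bulk migration. The sketch below shows only that range arithmetic in plain Python. It does not call Data Factory, and the `surveys` table and `survey_id` column are hypothetical names used for illustration.

```python
from typing import List, Tuple


def partition_id_range(min_id: int, max_id: int, num_partitions: int) -> List[Tuple[int, int]]:
    """Split the inclusive range [min_id, max_id] into contiguous,
    non-overlapping sub-ranges of near-equal size (one per copy job)."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    if max_id < min_id:
        return []
    total = max_id - min_id + 1
    num_partitions = min(num_partitions, total)  # avoid empty partitions
    base, extra = divmod(total, num_partitions)
    ranges = []
    start = min_id
    for i in range(num_partitions):
        # The first `extra` partitions take one additional ID each.
        size = base + (1 if i < extra else 0)
        end = start + size - 1
        ranges.append((start, end))
        start = end + 1
    return ranges


if __name__ == "__main__":
    # Hypothetical source table and key column, for illustration only.
    for lo, hi in partition_id_range(1, 12_000_000, 4):
        print(f"SELECT * FROM surveys WHERE survey_id BETWEEN {lo} AND {hi}")
```

Each range could feed one parallel copy. Near-equal sizes matter because the slowest partition decides when the migration finishes. The approach assumes the keys are reasonably dense; large gaps in the ID space would give partitions uneven row counts.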

Data Lake Setup: ADLS Gen2 was configured to create a hierarchical file system, allowing for better data organization and management.
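With the hierarchical namespace enabled, ADLS Gen2 treats directories as real objects, so a folder can be renamed atomically and given its own access control list. The sketch below builds one possible folder convention: a zone, a survey type, and Hive-style date partitions under an `abfss://` URI. The account, container, zone and file names are hypothetical, and this is an illustrative layout rather than our exact one.

```python
from datetime import date


def lake_path(account: str, container: str, zone: str, survey_type: str,
              collected_on: date, file_name: str) -> str:
    """Build an ADLS Gen2 (abfss) URI using a zone/type/date folder layout."""
    # Normalise the survey type so folder names stay predictable.
    folder = survey_type.strip().lower().replace(" ", "-")
    # Hive-style key=value folders let engines such as Spark prune by date.
    partition = (f"year={collected_on.year:04d}/"
                 f"month={collected_on.month:02d}/"
                 f"day={collected_on.day:02d}")
    return (f"abfss://{container}@{account}.dfs.core.windows.net/"
            f"{zone}/{folder}/{partition}/{file_name}")


if __name__ == "__main__":
    print(lake_path("examplelake", "surveys", "raw", "Household Survey",
                    date(2024, 1, 24), "batch-0001.parquet"))
```

Keeping raw and curated zones in separate top-level folders makes it straightforward to apply different access rules to each.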

Pipeline Creation: We built automated data pipelines using Azure Data Factory, ensuring seamless data flow from ingestion to storage.
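In Data Factory, pipeline stages are configured activities rather than code, but the flow from ingestion to storage can be illustrated with ordinary functions. In this hypothetical sketch, each stage either returns a cleaned record or raises `ValueError`. Records that fail are routed to a rejected list together with the reason instead of stopping the whole run. The stage names are illustrative only.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple

Record = Dict[str, Any]
Stage = Callable[[Record], Record]


def run_pipeline(records: Iterable[Record],
                 stages: List[Stage]) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Apply stages in order; collect failures instead of aborting the run."""
    accepted: List[Record] = []
    rejected: List[Tuple[Record, str]] = []
    for original in records:
        current = original
        try:
            for stage in stages:
                current = stage(current)
        except ValueError as exc:
            # Keep the untouched input plus the failing stage and reason.
            rejected.append((original, f"{stage.__name__}: {exc}"))
        else:
            accepted.append(current)
    return accepted, rejected


def normalise_keys(record: Record) -> Record:
    """Trim and lowercase field names so 'Survey_ID ' and 'survey_id' match."""
    return {str(key).strip().lower(): value for key, value in record.items()}


def require_survey_id(record: Record) -> Record:
    """Reject records that cannot be linked back to a survey."""
    if record.get("survey_id") is None:
        raise ValueError("missing survey_id")
    return record


if __name__ == "__main__":
    ok, bad = run_pipeline([{" Survey_ID ": 1, "Age": 30}, {"age": 41}],
                           [normalise_keys, require_survey_id])
    print(ok)
    print(bad)
```

Keeping rejected records with their reason makes it possible to fix and replay them later without rerunning the whole load.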

Security and Compliance: Implementing Azure's security features, such as access control lists (ACLs) and encryption at rest, was crucial to protecting sensitive data.
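ADLS Gen2 access control lists use POSIX-style entries such as `user::rwx` or `group:<object-id>:r-x`, joined with commas. An entry prefixed with `default:` is inherited by items created later under a directory. The helper below only assembles and validates such a string. The group object ID is a placeholder, and applying the result (for example through the Azure Storage SDK's `set_access_control`) is outside this sketch.

```python
import re
from typing import List

_PERMS = re.compile(r"^[r-][w-][x-]$")
_KINDS = {"user", "group", "other", "mask"}


def acl_entry(kind: str, perms: str, principal: str = "", default: bool = False) -> str:
    """Return one ACL entry such as 'group:<id>:r-x' or 'default:user::rwx'."""
    if kind not in _KINDS:
        raise ValueError(f"unknown entry type: {kind}")
    if not _PERMS.match(perms):
        raise ValueError(f"permissions must look like 'r-x', got {perms!r}")
    if principal and kind in {"other", "mask"}:
        raise ValueError(f"'{kind}' entries cannot name a principal")
    entry = f"{kind}:{principal}:{perms}"
    return f"default:{entry}" if default else entry


def build_acl(entries: List[str]) -> str:
    """Join entries into the comma-separated form used for ADLS Gen2 ACLs."""
    return ",".join(entries)


# Placeholder object ID for a hypothetical read-only analysts group.
ANALYSTS = "00000000-0000-0000-0000-000000000000"

acl = build_acl([
    acl_entry("user", "rwx"),             # owning user: full access
    acl_entry("group", "r-x"),            # owning group: read and traverse
    acl_entry("other", "---"),            # everyone else: no access
    acl_entry("group", "r-x", ANALYSTS),  # named group: read and traverse
    acl_entry("mask", "r-x"),             # upper bound for named entries
])
```

For a principal to reach a file, it also needs execute (`x`) permission on every parent directory, which is a common source of unexpected "access denied" errors.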

Challenges and Solutions

Throughout the implementation, we faced several challenges:

Data Ingestion at Scale: Ingesting massive volumes of data in real time required us to optimize our Azure Data Factory pipelines.
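One common way to ease ingestion pressure is to group incoming records into batches capped by both count and size. Each write to the lake then carries a reasonable amount of data instead of producing many tiny files. The sketch below assumes that records arrive as JSON-serialisable dictionaries and are written as JSON Lines. The limits are hypothetical tuning parameters, not values from our project.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List

Record = Dict[str, Any]


def batch_records(records: Iterable[Record], max_records: int,
                  max_bytes: int) -> Iterator[List[Record]]:
    """Yield lists of records capped by count and by serialised size."""
    batch: List[Record] = []
    batch_bytes = 0
    for record in records:
        # Size as one JSON Lines row: UTF-8 bytes plus the trailing newline.
        size = len(json.dumps(record).encode("utf-8")) + 1
        if batch and (len(batch) >= max_records or batch_bytes + size > max_bytes):
            yield batch
            batch, batch_bytes = [], 0
        # An oversized record is still emitted, alone in its own batch.
        batch.append(record)
        batch_bytes += size
    if batch:
        yield batch
```

Each yielded batch could then be written as a single file in the raw zone. Capping by size as well as count prevents unusually large survey responses from producing oversized files.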

Data Transformation: Azure Synapse's data processing capabilities allowed us to handle complex transformations across differently structured data.
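When records do not share a single structure, a frequent transformation step is to flatten nested fields into dotted column names and then take the union of columns, so that differently shaped records fit into one table. The plain-Python sketch below illustrates that technique. It is not a copy of our Synapse jobs, which would do the equivalent work at scale.

```python
from typing import Any, Dict, List, Tuple


def flatten(value: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts/lists into {'a.b.0.c': value} form.

    Empty dicts and lists produce no columns."""
    if isinstance(value, dict):
        children = [(str(key), child) for key, child in value.items()]
    elif isinstance(value, list):
        # List positions become part of the column name.
        children = [(str(index), child) for index, child in enumerate(value)]
    else:
        return {prefix: value}
    flat: Dict[str, Any] = {}
    for key, child in children:
        flat.update(flatten(child, f"{prefix}.{key}" if prefix else key))
    return flat


def to_table(records: List[Dict[str, Any]]) -> Tuple[List[str], List[List[Any]]]:
    """Align differently shaped records to one sorted column list (None = missing)."""
    rows = [flatten(record) for record in records]
    columns = sorted({column for row in rows for column in row})
    return columns, [[row.get(column) for column in columns] for row in rows]
```

Encoding list positions into column names keeps the sketch short, but it can produce very wide tables when lists are long. Exploding such lists into a separate child table is the usual alternative.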

Performance Tuning: To maintain peak performance, we continuously monitored and tuned our Azure services, paying particular attention to runtime optimizations for Synapse and Data Lake Storage.
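Large numbers of small files are a well-known cause of slow scans on data lakes. One tuning step often applied is to compact them into fewer files near a target size. The sketch below plans such a compaction with the first-fit decreasing bin-packing heuristic. The file names and target size are hypothetical, and the actual rewriting of the files is not shown.

```python
from typing import Dict, List


def plan_compaction(file_sizes: Dict[str, int], target_bytes: int) -> List[List[str]]:
    """Group files into bins of at most target_bytes using first-fit decreasing.

    A file larger than target_bytes ends up in a bin of its own."""
    bins: List[List[str]] = []
    totals: List[int] = []
    # Place the largest files first; this usually yields fewer, fuller bins.
    for name, size in sorted(file_sizes.items(), key=lambda item: item[1], reverse=True):
        for i, total in enumerate(totals):
            if total + size <= target_bytes:
                bins[i].append(name)
                totals[i] += size
                break
        else:
            # No existing bin has room: open a new one.
            bins.append([name])
            totals.append(size)
    return bins
```

Each bin would become one output file. First-fit decreasing is a heuristic rather than an optimal packing, but it is simple and usually close enough for planning compaction.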

Results and Benefits

Post-implementation, the improvements were substantial:

- Enhanced data processing speed and efficiency.

- Scalable architecture capable of handling future data growth.

- Improved data accessibility for analytics and business intelligence.

Conclusion

This project served as evidence of Azure's ability to manage challenging, large-scale data warehousing projects. In addition to providing an immediate solution to our data challenges, our experience with Azure ADLS Gen2 and data pipelines laid the groundwork for upcoming data-driven projects.

For readers interested in the subsequent stages of data analysis, particularly data processing, we invite you to explore our companion blog “Big Data Processing with Apache Spark — the last journey through a fragmented data world”, where we delve into the intricacies of data processing within the Azure ecosystem.

Tags

- Business Insights

- Azure Data Warehouse

- Data Analysis

- Big Data Solutions

- Data Management

- Data Pipelines

- In22labsCaseStudy

- Data Engineering

- Data Security

- In22labs

- PowerBI

- Data Analytics

- e-governance

Written by

Vishnu Mithran A

Published on

24 January 2024